Online Cluster¶

Infrastructure¶

During beamtime, a dedicated online cluster (ONC) is available exclusively to the experiment team members and instrument support staff.

Note

Each online cluster node has 4 Intel Xeon E5-264 v4 CPUs @ 2.4 GHz with 10 cores per CPU, for a total of 40 logical cores, and 256 GB of memory.

European XFEL aims to keep the software provided on the ONC identical to that available on the offline cluster (which is the Maxwell cluster).

SASE 1¶

Beamtime in SASE 1 is shared between the FXE and the SPB/SFX instruments, with alternating shifts: when the FXE shift stops, the SPB/SFX shift starts, and vice versa.

Within SASE1, one node is reserved for the SPB/SFX experiments (sa1-onc-spb), and one node is reserved for the FXE experiments (sa1-onc-fxe). These can be used by the groups at any time during the experiment period (i.e. during shifts and between shifts).

Both the SPB/SFX and the FXE users have shared access to another 7 nodes. The default expectation is that these nodes are used during the users' own shift, and that usage stops at the end of the shift (so that the other experiment can start using the machines during their shift). These are sa1-onc-01, sa1-onc-02, sa1-onc-03, sa1-onc-04, sa1-onc-05, sa1-onc-06, sa1-ong-01.

Overview of available nodes and usage policy:

| Name | Purpose |
|---|---|
| sa1-onc-spb | Reserved for SPB/SFX |
| sa1-onc-fxe | Reserved for FXE |
| sa1-onc-01 to sa1-onc-06 | Shared between FXE and SPB; use only during shifts |
| sa1-ong-01 | Shared between FXE and SPB; GPU: Tesla V100 (16GB) |

These nodes do not have access to the Internet.

The node name prefix sa1-onc- stands for SAse1-ONlineCluster.

SASE 2¶

Beamtime in SASE 2 is shared between the MID and the HED instruments, with alternating shifts: when the MID shift stops, the HED shift starts, and vice versa.

Within SASE2, one node is reserved for the MID experiments (sa2-onc-mid), and one node is reserved for the HED experiments (sa2-onc-hed). These can be used by the groups at any time during the experiment period (i.e. during shifts and between shifts).

Both the MID and the HED users have shared access to another 7 nodes. The default expectation is that these nodes are used during the users' own shift, and that usage stops at the end of the shift (so that the other experiment can start using the machines during their shift). These are sa2-onc-01, sa2-onc-02, sa2-onc-03, sa2-onc-04, sa2-onc-05, sa2-onc-06, sa2-ong-01.

Overview of available nodes and usage policy:

| Name | Purpose |
|---|---|
| sa2-onc-mid | Reserved for MID |
| sa2-onc-hed | Reserved for HED |
| sa2-onc-01 to sa2-onc-06 | Shared between MID and HED; use only during shifts |
| sa2-ong-01 | Shared between HED and MID; GPU: Tesla V100 (16GB) |

These nodes do not have access to the Internet.

The node name prefix sa2-onc- stands for SAse2-ONlineCluster.

SASE 3¶

Beamtime in SASE 3 is shared between the SQS and the SCS instruments, with alternating shifts: when the SQS shift stops, the SCS shift starts, and vice versa.

Within SASE3, one node is reserved for the SCS experiments (sa3-onc-scs), and one node is reserved for the SQS experiments (sa3-onc-sqs). These can be used by the groups at any time during the experiment period (i.e. during shifts and between shifts).

Both the SQS and the SCS users have shared access to another 7 nodes. The default expectation is that these nodes are used during the users' own shift, and that usage stops at the end of the shift (so that the other experiment can start using the machines during their shift). These are sa3-onc-01, sa3-onc-02, sa3-onc-03, sa3-onc-04, sa3-onc-05, sa3-onc-06, sa3-ong-01.

Overview of available nodes and usage policy:

| Name | Purpose |
|---|---|
| sa3-onc-scs | Reserved for SCS |
| sa3-onc-sqs | Reserved for SQS |
| sa3-onc-01 to sa3-onc-06 | Shared between SCS and SQS; use only during shifts |
| sa3-ong-01 | Shared between SCS and SQS; GPU: Tesla V100 (16GB) |

These nodes do not have access to the Internet.

The node name prefix sa3-onc- stands for SAse3-ONlineCluster.

Note that the usage policy on shared nodes is not strictly enforced. Scientists across instruments should liaise for agreement on usage other than specified here.

Access to Online Cluster¶

During your beamtime, you can SSH to the online cluster with two hops:

    # Replace `$USER` with your username
    ssh $USER@max-exfl-display003.desy.de  # 003 or 004
    ssh sa3-onc-scs  # Name of a specific node - see above

This only works during your beamtime, not before or after. You should connect to the reserved node for the instrument you're using, and then make another hop to the shared nodes if you need them.

Workstations in the control hutches, and dedicated access workstations in the XFEL headquarters building (marked with an X in the map below) can connect directly to the online cluster.

More Information

A map showing the location of the ONC workstations can be downloaded here

From these access computers, one can ssh directly into the online cluster nodes and also to the Maxwell cluster (see Offline Cluster). The X display is forwarded automatically in both cases.

Direct internet access from the online cluster is not possible.

Online Storage¶

The following folders are available on the online cluster for each proposal during the experiment:

| Directory | Description |
|---|---|
| raw | Data files recorded from the experiment (read-only) |
| usr | Beamtime store. Into this folder users can upload some files, data or scripts to be used during the beamtime. This folder is synchronised with the corresponding usr folder in the offline cluster. There is not a lot of space here (5TB). |
| scratch | Folder where users can write temporary data, i.e. the output of customized calibration pipelines etc. This folder is intended for large amounts of processed data. If the processed data is small in volume, it is recommended to use usr. Data in scratch is considered temporary, and will be deleted some time after your experiment. |
| proc | In some cases data is created here by near-online processing services, but if so it is only available during the beamtime, and it will be deleted afterwards. This is separate from the proc folder on the offline cluster. |

These folders are accessible at the same paths as on the offline cluster:

    /gpfs/exfel/exp/$INST/$CYCLE/p$PROPOSAL_ID/{raw, usr, proc, scratch}

Warning

Your home directory on online nodes is only shared between nodes within each SASE (e.g. sa1- nodes for SPB & FXE). It is also entirely separate from your home directory on the offline (Maxwell) cluster.

To share files between the online and the offline cluster, use the usr directory for your proposal, e.g. /gpfs/exfel/exp/FXE/202122/p002958/usr. All users associated with a proposal have access to this directory.
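As a minimal sketch, the path template above can be filled in with a small Python helper. The function name `proposal_folder` and the constants are hypothetical, and the six-digit zero padding of the proposal number is an assumption inferred from the example path `p002958`.

```python
from pathlib import PurePosixPath

# Root of the experiment data tree, taken from the path template above.
EXP_ROOT = PurePosixPath("/gpfs/exfel/exp")
# The four per-proposal folders listed in the table above.
FOLDERS = ("raw", "usr", "proc", "scratch")


def proposal_folder(instrument, cycle, proposal, folder):
    """Return the path of one proposal folder (hypothetical helper)."""
    if folder not in FOLDERS:
        raise ValueError(f"unknown folder {folder!r}, expected one of {FOLDERS}")
    # Assumption: proposal numbers are zero-padded to six digits (p002958).
    return EXP_ROOT / instrument / str(cycle) / f"p{int(proposal):06d}" / folder


usr_dir = proposal_folder("FXE", 202122, 2958, "usr")
print(usr_dir)
```

With the values from the example above, this reproduces the path `/gpfs/exfel/exp/FXE/202122/p002958/usr`.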

Access to Data on the Online Cluster¶

Tools running on the online cluster can get streaming data from Karabo Bridge.

Data is also available in HDF5 files in the proposal's raw folder, and can be read using EXtra-data. But there are some limitations to reading files on the online cluster:

- You can't read the files which are currently being written. You can read complete runs after they have been closed, and you can read partial data from the current run because the writers periodically close one 'sequence file' and start a new one.

- Once runs are migrated to the offline cluster, the data on the online cluster may be deleted to free up space. You can expect data to remain available during a shift, but don't assume it will stay on the online cluster for the long term.

- Corrected detector data is not available in files on the online cluster, because the corrected files are generated after data is migrated to the offline cluster. You can get corrected data as a stream, though.
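The following sketch shows one way a run in the raw folder might be opened with EXtra-data. It assumes that the `extra_data` package is installed and that run folders are named `r0001`, `r0002`, and so on; the helper names `raw_run_dir` and `summarise_run` are hypothetical. What can actually be read while a run is still being recorded is subject to the limitations listed above.

```python
from pathlib import PurePosixPath


def raw_run_dir(proposal_dir, run_number):
    """Path of one run inside raw/ (assumes folders named r0001, r0002, ...)."""
    return PurePosixPath(proposal_dir) / "raw" / f"r{int(run_number):04d}"


def summarise_run(proposal_dir, run_number):
    """Open a run and return (number of trains, sorted source names)."""
    # Imported here so the path helper above works without EXtra-data installed.
    from extra_data import RunDirectory

    run = RunDirectory(str(raw_run_dir(proposal_dir, run_number)))
    return len(run.train_ids), sorted(run.all_sources)


print(raw_run_dir("/gpfs/exfel/exp/FXE/202122/p002958", 12))
```

On an online cluster node, `summarise_run("/gpfs/exfel/exp/FXE/202122/p002958", 12)` would then report how many trains and which sources are readable at that moment; the proposal path and run number here are placeholders.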

Analysis¶

Broadly speaking, there are four ways to analyse data in real-time:

- Karabo Scenes - EuXFEL control system GUI

- EXtra-foam - Online data analysis and visualisation, mainly for 2D detectors

- EXtra-metro - Processing framework enabling custom analysis

- Karabo bridge - 'proxy' interface to stream data to tools not integrated into anything else

Each of them has different trade-offs when it comes to usability and flexibility. Karabo scenes and EXtra-foam are designed to be easy to use, but extending them can be difficult. On the other hand, EXtra-metro and writing your own tools offer the most flexibility, but usually take a lot more work.

Note

Given enough prior notice, custom analysis code can be created in collaboration with the users and made available for European XFEL experiments.

Jupyter¶

For instructions on using Jupyter on the Online Cluster, see: JupyterHub - Online Cluster

Karabo Scenes¶

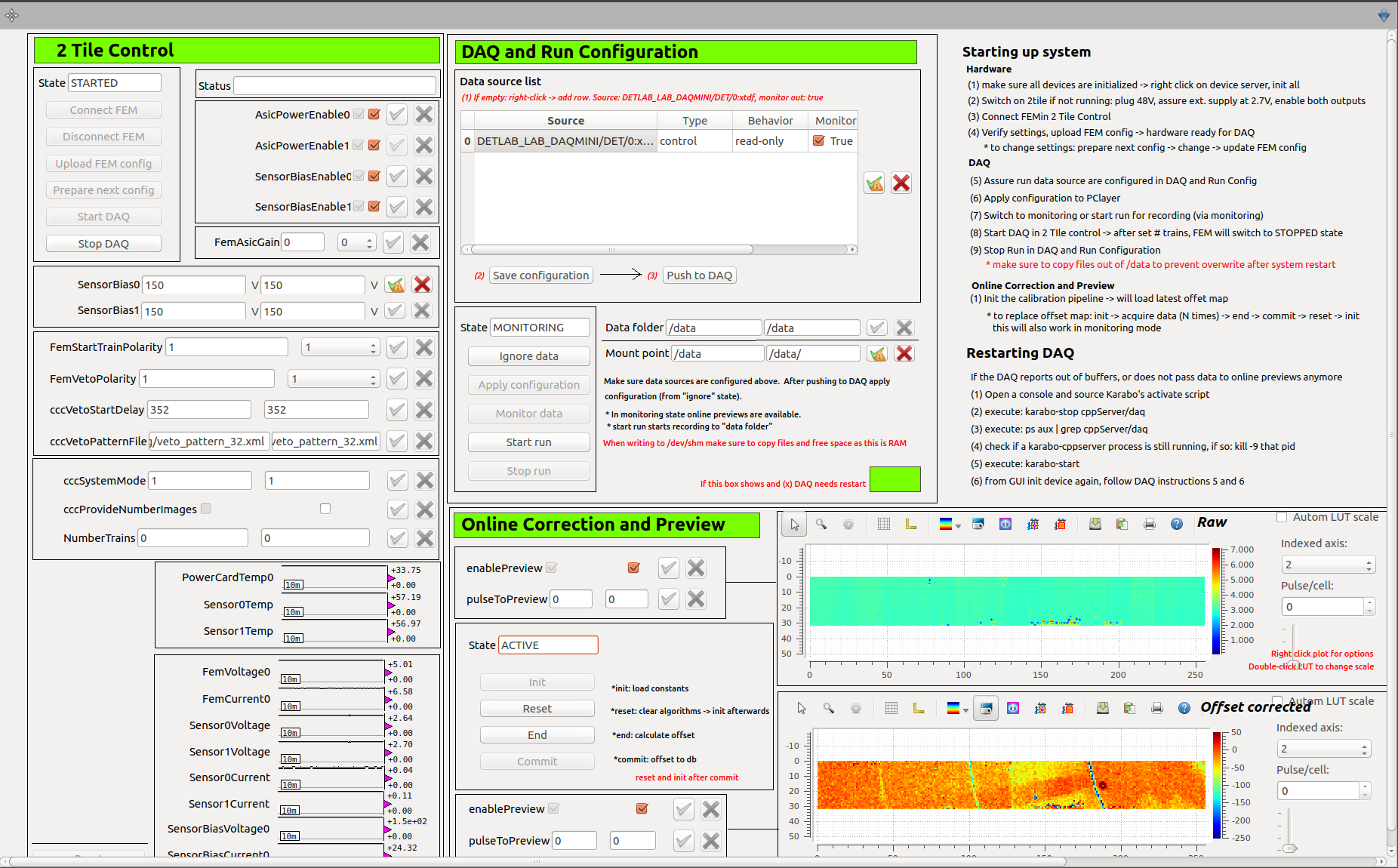

Karabo (the control system at EuXFEL) provides customised GUI scenes that display relevant data during an experiment, such as live previews of corrected X-ray images from the detector, results from integrated data analysis pipelines, and other crucial parameters such as train and pulse ID, beam energy, detector position, and, for pump-probe experiments, the pump-probe laser state, pump-probe laser delay, etc.

Specific scenes can be developed for each experiment's needs.

EXtra-foam¶

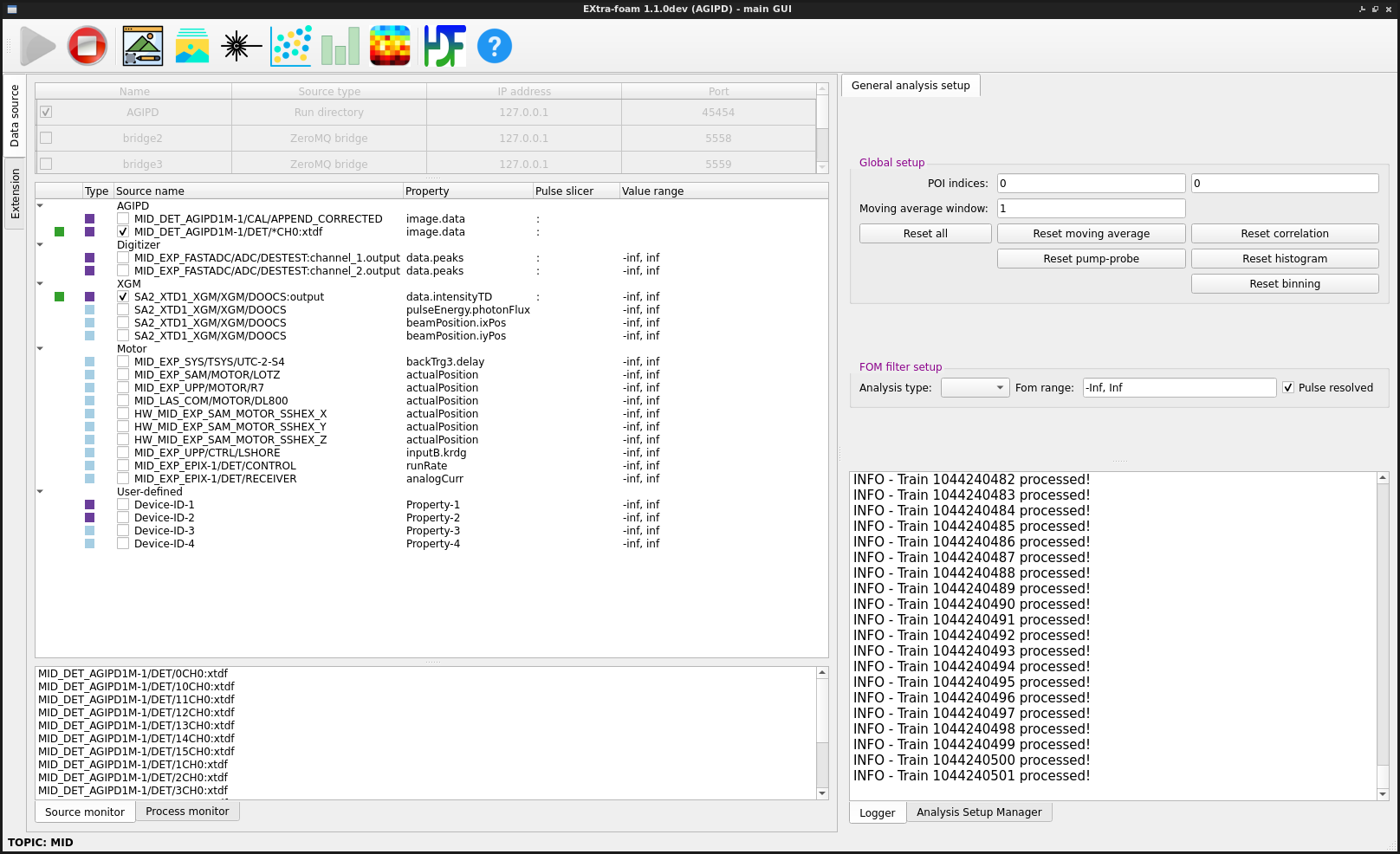

EXtra-foam is a tool that provides online data analysis and visualization for experiments, primarily for 2D detectors. Some features it provides are:

- Live preview of the detector. For multi-module detectors it is possible to specify the geometry for an accurate preview.

- Azimuthal integration.

- Analysis of ROIs on the detector, and the ability to correlate features of a ROI (e.g. intensity) with other data (e.g. time, motor position, etc).

- Recording and subtracting dark images.

It works with AGIPD, LPD, JUNGFRAU, DSSC, ePix, and any train-resolved camera (e.g. Basler/Zyla cameras, etc).

The main window of EXtra-foam, where different data sources can be selected for processing:

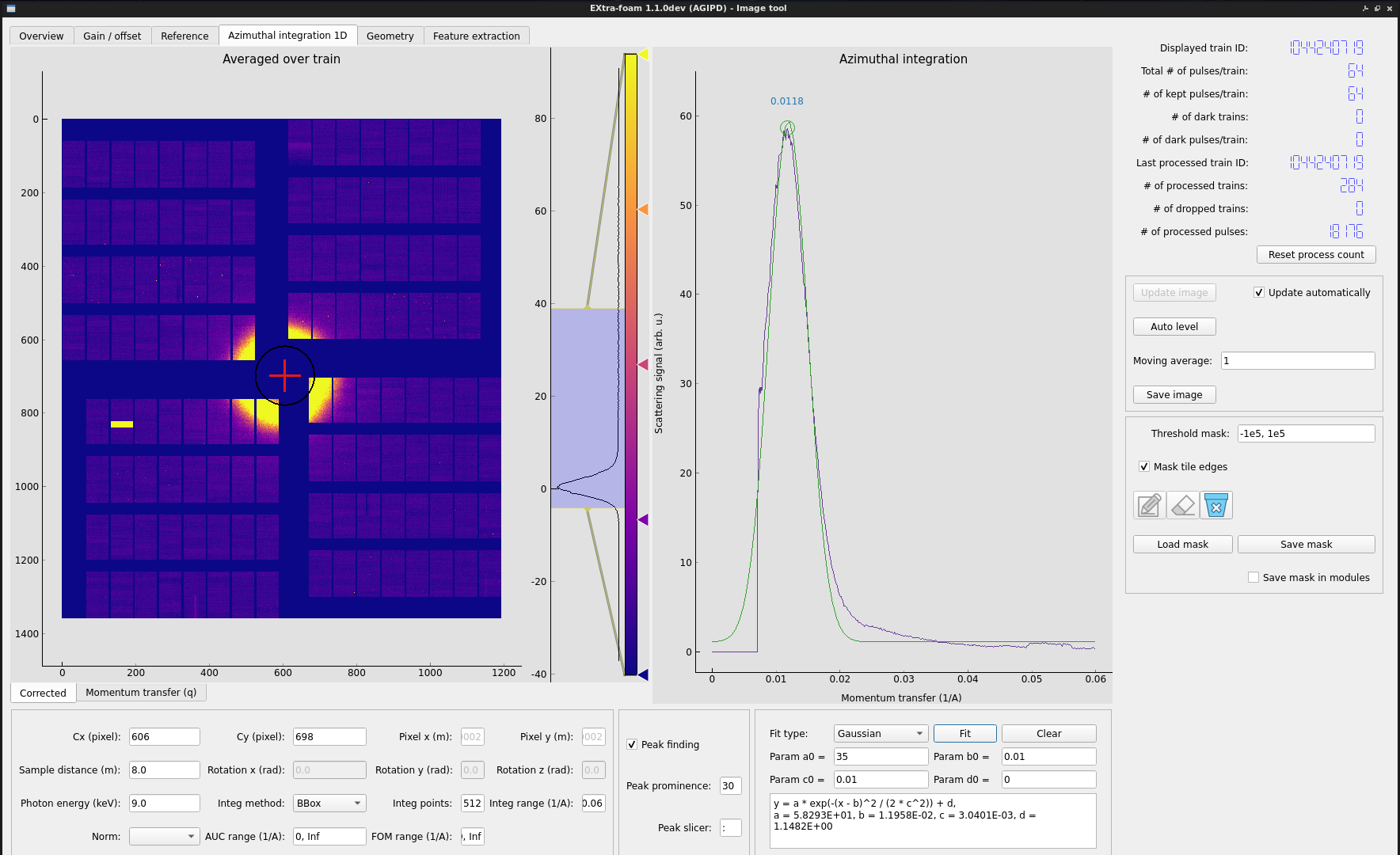

Example of computing and fitting the I(q) curve:

In addition to the main program, there are separate programs called special suites that implement more specialized analysis for different experiments or instruments.

The EXtra-foam documentation has more details on its features.

EXtra-foam was designed for doing very well-defined kinds of analysis, so in some cases it's quite inflexible. If more flexibility is needed for an experiment, EXtra-metro is an option.

EXtra-metro¶

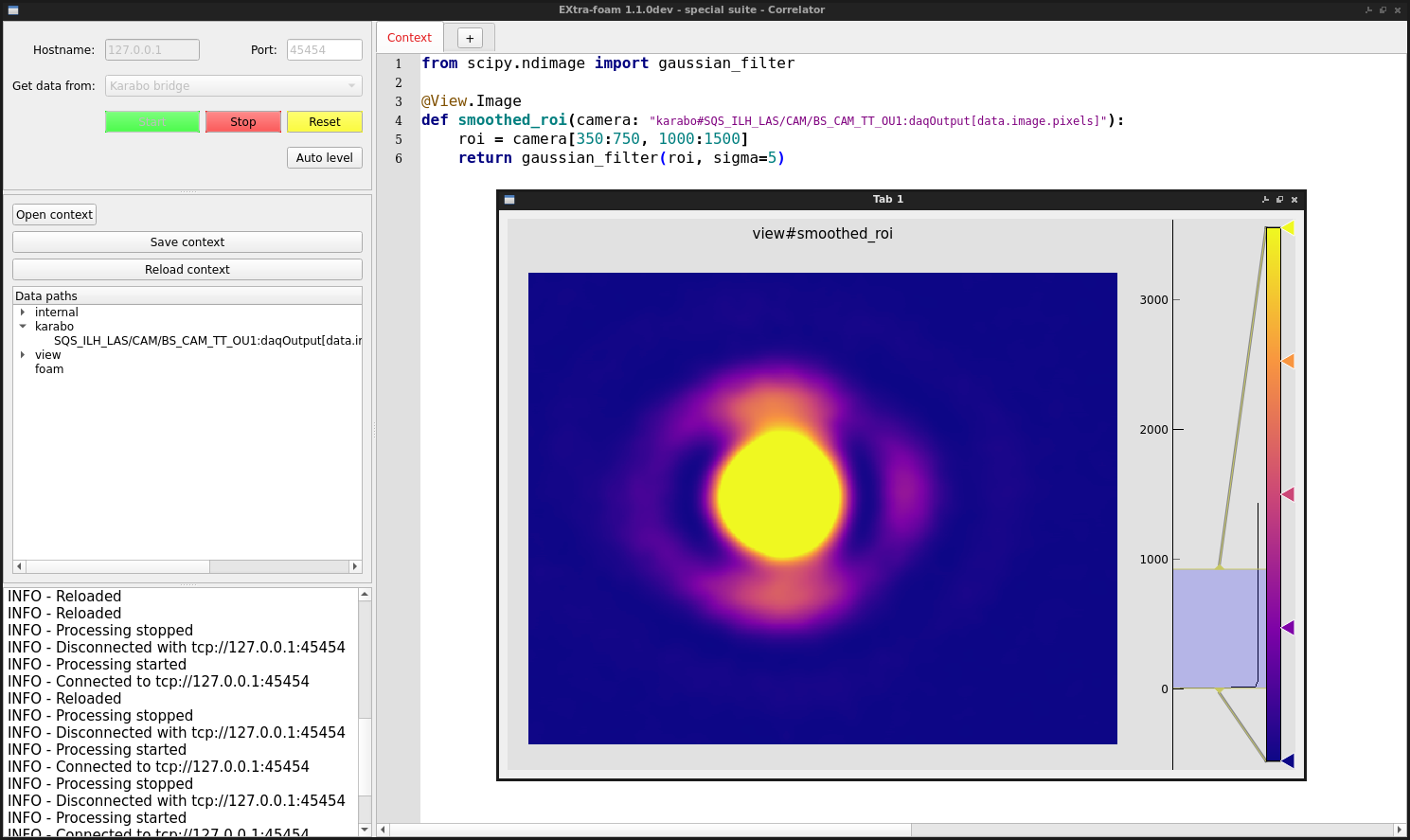

EXtra-metro is a processing framework designed to let you implement your own analysis. For example, let's say that you want to select an ROI from a camera and apply some smoothing to it:

    from scipy.ndimage import gaussian_filter

    @View.Image
    def smoothed_roi(camera: "karabo#SQS_ILH_LAS/CAM/BS_CAM_TT_OU1:output[data.image.pixels]"):
        # Select the region of interest from the camera image
        roi = camera[350:750, 1000:1500]
        # Smooth the ROI with a Gaussian filter
        return gaussian_filter(roi, sigma=5)

Here we've defined a function which takes in an ndarray of image data from a camera. It selects an ROI from the image, smooths it using a Gaussian filter from scipy, and returns the result. The function is decorated with @View.Image, which is a decorator that tells EXtra-metro that this function is a 'view' into some data, and it should be visualized as an image.

If this is loaded into a program that embeds EXtra-metro and data is streamed to it, the function will be executed by EXtra-metro for each train and the results will be streamed out for visualization. For example, EXtra-foam embeds EXtra-metro in a special suite, and if you run the above code you might see something like this:

Example of online analysis using EXtra-metro:

If you want to modify the analysis, such as tweak the sigma factor or add another View, it's possible to modify the code and reload it on-the-fly. This makes EXtra-metro excellent for anything that requires a lot of flexibility.

EXtra-metro has also been integrated with Karabo, so it's possible to create scenes with plots and parameters to control analysis code.

A scene with analysis implemented in EXtra-metro, but controlled and visualized through a custom Karabo scene:

Check out the EXtra-metro documentation for more information on how to write analysis code, and the MetroProcessor documentation for information on the integration with Karabo.

Streaming from Karabo Bridge¶

A Karabo bridge is a proxy interface to stream data to tools that are not integrated into the Karabo framework. It can be configured to provide any detector or control data. This interface is primarily for online data analysis (near real-time), but the extra_data Python package can also stream data from files using the same protocol, which may be useful for testing.

We provide Karabo Bridge Python and C++ clients, but you can also write your own code to receive the data if necessary. A custom client will need to implement the Karabo Bridge Protocol.

Any user tool will need to be running on the Online Cluster to access the Karabo bridge devices that are streaming data. Some instruments already have bridges running and configured for sending detector data, but if not it is possible to set them up. Please tell your contact person at the instrument if you will be bringing custom tools that require a bridge.

Note

Data from MHz detectors at the EuXFEL (AGIPD, LPD, DSSC) may have a different shape in files and in data streams. In files, the data for each train has the shape (pulse index, slow scan axis, fast scan axis). For performance reasons, the default shape for this data in a stream is (module number, fast scan, slow scan, pulse index). This configuration can be changed on request during beamtime, switching between two shapes:

- Online: (module number, fast scan, slow scan, pulse index)

- File-like: (pulse index, module number, slow scan, fast scan)

If you wish to use one or the other, communicate it to your contact person at the instrument.

Using the file-like data shape online adds delay to the data stream, so you might want to avoid this option if fast feedback is important for your experiment.
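If a tool receives the default online layout but expects the file-like one, the axes can be reordered on the client side. The sketch below derives the axis permutation between the two layouts listed above; the axis labels, constant names and the helper `to_file_like_shape` are hypothetical, and the example shape (16 modules, 128 fast-scan pixels, 512 slow-scan pixels, 64 pulses) is only an illustrative assumption.

```python
# Axis order of the two stream layouts described above.
ONLINE_AXES = ("module", "fast_scan", "slow_scan", "pulse")
FILE_LIKE_AXES = ("pulse", "module", "slow_scan", "fast_scan")

# For each file-like axis, the position of the same axis in the online layout.
# This tuple can be passed as the `axes` argument of numpy.transpose().
ONLINE_TO_FILE_LIKE = tuple(ONLINE_AXES.index(name) for name in FILE_LIKE_AXES)


def to_file_like_shape(online_shape):
    """Shape an array would have after reordering online axes to file-like."""
    return tuple(online_shape[i] for i in ONLINE_TO_FILE_LIKE)


print(ONLINE_TO_FILE_LIKE)
print(to_file_like_shape((16, 128, 512, 64)))
```

With NumPy, `numpy.transpose(data, ONLINE_TO_FILE_LIKE)` applies the same permutation to an actual array. Reordering on the client costs time as well, so it does not remove the trade-off described above.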

Simulation¶

In order to test tools with Karabo bridge, we provide a simulated server in the `karabo_bridge` Python package. This sends nonsense data with the same structure as real data. To start a server, run the command:

    karabo-bridge-server-sim 4545

The number (4545) must be an unused local TCP port above 1024.
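A client can then be pointed at the simulated server. The sketch below assumes the `karabo_bridge` package is installed and that the simulator is running on the same machine; the helper names `bridge_endpoint` and `receive_one_train` are hypothetical, while `Client` and its `next()` method come from the `karabo_bridge` package.

```python
def bridge_endpoint(host, port):
    """Build a TCP endpoint string, checking the port range noted above."""
    port = int(port)
    if not 1024 < port <= 65535:
        raise ValueError(f"port must be above 1024 and at most 65535, got {port}")
    return f"tcp://{host}:{port}"


def receive_one_train(endpoint):
    """Receive one train and return {source name: sorted list of keys}."""
    # Imported here so bridge_endpoint() works without karabo_bridge installed.
    from karabo_bridge import Client

    client = Client(endpoint)
    # next() blocks until the server has sent the data for one train.
    data, metadata = client.next()
    return {source: sorted(keys) for source, keys in data.items()}
```

With the simulator started as shown above, `receive_one_train(bridge_endpoint("localhost", 4545))` should return the sources and keys of one simulated train. During an experiment, the endpoint of the real bridge would be provided by the instrument staff.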