pyCalibration automated tests

Objective

Test the available detector calibrations against selected reference data to verify the consistency of the software and to catch subtle bugs that affect the quality of the produced data.

- Test that the calibration is processed successfully by the SLURM jobs:

  - The xfel-calibrate command line runs successfully.

  - The SLURM jobs are executed on Maxwell.

  - The SLURM jobs end in the COMPLETED state.

- Test the availability of files in the out-folder:

  - Validate the presence of a PDF report.

  - Validate that the number of HDF5 files matches the number of HDF5 files in the reference folder.

- Validate the numerical values of the processed data against the reference data.
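As an illustration of the SLURM check, here is a minimal Python sketch that decides whether a set of jobs ended in the COMPLETED state. It assumes the job IDs submitted by xfel-calibrate are already known and that Slurm's sacct command is available on the node; the helper names are hypothetical and are not taken from the pyCalibration test suite.

```python
import subprocess

# State every job (and job step) must end in for the test to pass.
EXPECTED_STATE = "COMPLETED"


def parse_sacct_states(sacct_output):
    """Return one state per non-empty line of sacct output.

    Expects output produced with --noheader --parsable2 --format=State.
    States such as "CANCELLED by 1234" are reduced to their first word.
    """
    states = []
    for line in sacct_output.splitlines():
        line = line.strip()
        if line:
            states.append(line.split()[0])
    return states


def all_jobs_completed(sacct_output):
    """True only if at least one state was reported and all are COMPLETED."""
    states = parse_sacct_states(sacct_output)
    return bool(states) and all(state == EXPECTED_STATE for state in states)


def query_job_states(job_ids):
    """Query Slurm accounting for the given job IDs (needs sacct on PATH)."""
    result = subprocess.run(
        ["sacct", "--jobs", ",".join(job_ids), "--noheader",
         "--parsable2", "--format=State"],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout
```

A call would look like `all_jobs_completed(query_job_states(["<job-id>", ...]))`. Because sacct also lists job steps (such as `.batch`) unless `--allocations` is given, this sketch requires every step to be COMPLETED as well.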

These tests are meant to run on all detector calibrations before any release, and per branch on the selected detectors/calibrations affected by that branch's changes.

Current state

- Tests are defined by a dict in callab_test.py:

      {
          "<test-name>(`<detector-calibration-operationMode>`)": {
              "det-type": "<detectorType>",
              "cal_type": "<calibrationType>",
              "config": {
                  "in-folder": "<inFolderPath>",
                  "out-folder": "<outFolderPath>",
                  "run": "<runNumber>",
                  "karabo-id": "detector",
                  "<config-name>": "<config-value>",
                  ...: ...,
              },
              "reference-folder": "{}/{}/{}",
          },
          ...
      }

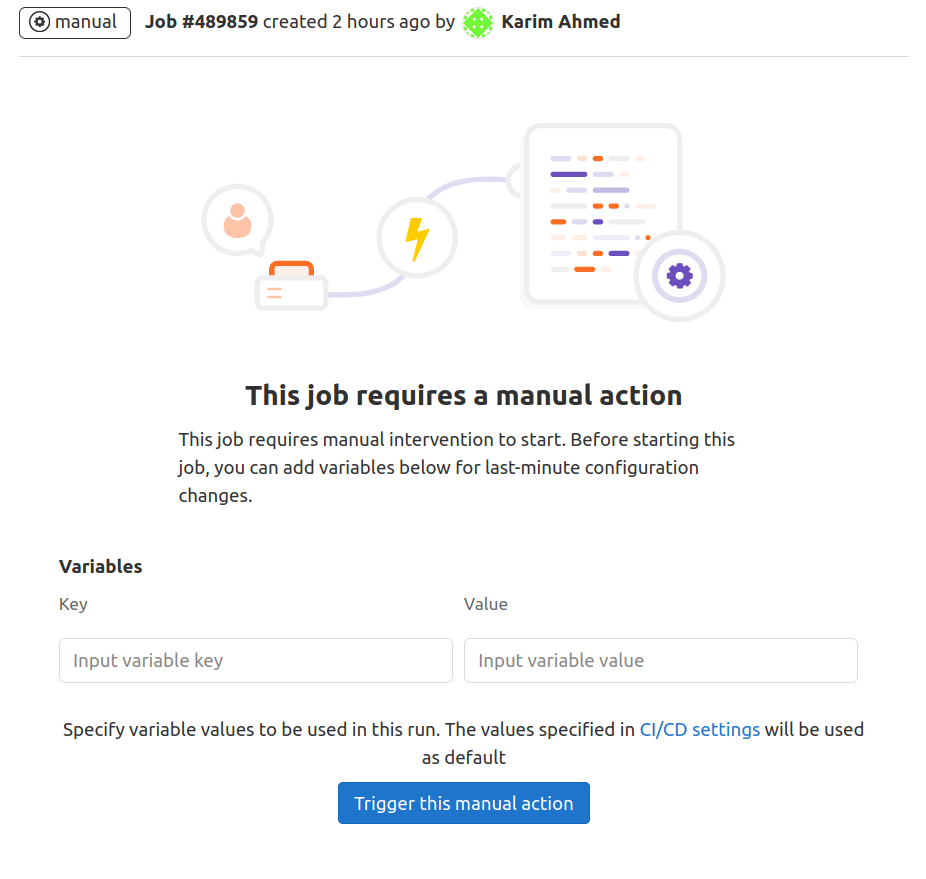
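To make the schema concrete, the following Python sketch fills it with hypothetical entries (the detector names, paths and run numbers are placeholders, not real test data) and filters them by calibration type and detector, as the calibration and detector options do. Filtering on the `cal_type` and `det-type` fields is an assumption for illustration, not necessarily how pyCalibration selects its tests.

```python
# Hypothetical entries following the test-dict schema above; every name,
# path and run number is a placeholder, not real reference data.
TESTS = {
    "DET_A-DARK": {
        "det-type": "DET_A",
        "cal_type": "DARK",
        "config": {
            "in-folder": "/path/to/raw",
            "out-folder": "/path/to/out",
            "run": "1",
            "karabo-id": "DET_A_ID",
        },
        "reference-folder": "{}/{}/{}",
    },
    "DET_A-CORRECT": {
        "det-type": "DET_A",
        "cal_type": "CORRECT",
        "config": {"in-folder": "/path/to/raw", "out-folder": "/path/to/out",
                   "run": "2", "karabo-id": "DET_A_ID"},
        "reference-folder": "{}/{}/{}",
    },
    "DET_B-DARK": {
        "det-type": "DET_B",
        "cal_type": "DARK",
        "config": {"in-folder": "/path/to/raw", "out-folder": "/path/to/out",
                   "run": "3", "karabo-id": "DET_B_ID"},
        "reference-folder": "{}/{}/{}",
    },
}


def select_tests(tests, calibration=None, detectors=None):
    """Return the tests matching a calibration type and/or a detector list.

    None means "no filter", so select_tests(TESTS) returns every test.
    The calibration comparison is case-insensitive ("dark" matches "DARK").
    """
    selected = {}
    for name, test in tests.items():
        if calibration is not None and test["cal_type"].upper() != calibration.upper():
            continue
        if detectors is not None and test["det-type"] not in detectors:
            continue
        selected[name] = test
    return selected


# Usage: keep only the dark calibrations.
print(list(select_tests(TESTS, calibration="dark")))  # -> ['DET_A-DARK', 'DET_B-DARK']
```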

- Tests are triggered using a manual trigger of the GitLab CI pipeline.

After a merge request is opened on GitLab, the CI automatically starts the unit test pipeline. Once these tests finish, an option to trigger the manual test becomes available.

If you trigger the tests through this GitLab feature without any configuration, all runs of the automated tests are executed. This is usually appropriate when a change affects all detectors and all calibration notebooks, or before deploying a new release.

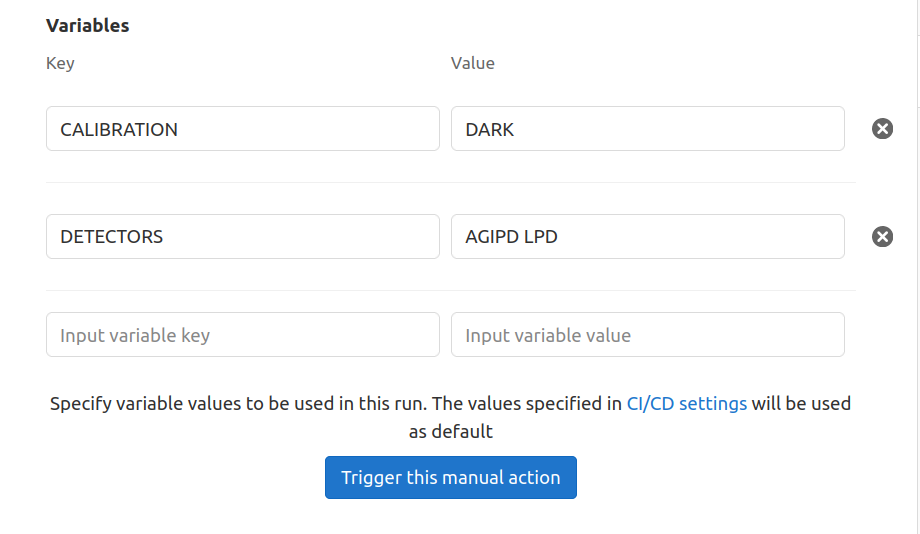

Otherwise, you can restrict the tests to a specific CALIBRATION (DARK or CORRECT), to a list of detectors, or both.

Warning

Avoid running the automated tests for more than one merge request at the same time: the tests are memory-intensive and all of them run on the same test node.

The progress report of the GitLab test pipeline jobs shows the test results and, for any failed calibration run, the reason for the failure.
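GitLab exposes pipeline variables to CI jobs as environment variables, so the manual-trigger options above could be translated into the local CLI options roughly as follows. The variable names CALIBRATION and DETECTORS, the comma-separated detector format, and passing `--detector` once per detector are assumptions for illustration, not the project's actual CI configuration.

```python
import os


def ci_options_from_env(env=None):
    """Translate manual-pipeline variables into extra pytest options.

    CALIBRATION and DETECTORS are hypothetical variable names. A missing or
    empty variable adds no option, i.e. all calibrations/detectors are tested.
    """
    env = os.environ if env is None else env
    options = []

    calibration = env.get("CALIBRATION", "").strip().lower()
    if calibration:
        if calibration not in ("dark", "correct"):
            raise ValueError(f"unsupported CALIBRATION: {calibration!r}")
        options += ["--calibration", calibration]

    # Comma-separated list, e.g. "DET_A,DET_B"; one --detector flag per entry.
    for detector in env.get("DETECTORS", "").split(","):
        if detector.strip():
            options += ["--detector", detector.strip()]

    return options
```

With no variables set, the function returns an empty list, which corresponds to running all automated tests.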

- Tests can also be triggered locally from the command line:

      pytest tests/test_reference_runs/test_pre_deployment.py \
          --release-test \
          --reference-folder <reference-folder-path> \
          --out-folder <out-folder-path>
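The same invocation can be made from Python through `pytest.main`, which takes the command-line arguments as a list and returns pytest's exit code. This is a minimal sketch: `build_release_test_args` and `run_release_tests` are hypothetical helpers, and the folder paths are placeholders.

```python
def build_release_test_args(reference_folder, out_folder, extra=()):
    """Assemble the same arguments as the command line above."""
    return [
        "tests/test_reference_runs/test_pre_deployment.py",
        "--release-test",
        "--reference-folder", reference_folder,
        "--out-folder", out_folder,
        *extra,
    ]


def run_release_tests(reference_folder, out_folder, extra=()):
    """Run the automated tests in-process and return pytest's exit code."""
    import pytest  # only needed when the tests are actually run

    return pytest.main(build_release_test_args(reference_folder, out_folder, extra))
```

For example, `run_release_tests("/path/to/reference", "/path/to/output", ["--calibration", "dark"])` would restrict the run to dark calibrations; pytest returns 0 when all collected tests pass.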

- Arguments:

  - Required arguments:

    - release-test: needed to trigger the automated tests. This boolean flag was created so that the automated tests are not triggered as part of the regular GitLab CI.

    - reference-folder: the path of the reference folder. The reference folder is expected to have exactly the same structure as the out-folder. Usually, the reference folders are out-folders from previous, successfully tested releases.

    - out-folder: the output folder path for saving the test output files. The structure is `<detector>/<test-name>/[PDF, HDF5, YAML, SlurmOut, ...]`.

  - Optional arguments:

    - picked-test: run a single picked test only.

    - calibration: run the tests for only one calibration type [dark or correct].

    - detector: run the tests for the selected detectors only and skip the rest.

    - no-numerical-validation: the numerical validation is done by default. This argument skips it, so the test stops after executing the calibration and making sure that the SLURM jobs were COMPLETED.

    - validation-only: if the test output and reference files are already available and only a validation check is needed, this argument runs the validation checks without executing any calibration jobs.
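Command-line options like these are normally registered in a `conftest.py` through pytest's `pytest_addoption` hook. The following is a minimal sketch of such a registration; the option meanings follow the list above, while the exact actions (for example, `--detector` being repeatable and `--calibration` being limited to dark and correct) are assumptions rather than pyCalibration's actual definitions.

```python
# conftest.py (hypothetical sketch)


def pytest_addoption(parser):
    """Register the automated-test options; boolean flags default to False."""
    parser.addoption("--release-test", action="store_true", default=False,
                     help="Run the automated reference tests (skipped in regular CI).")
    parser.addoption("--reference-folder", action="store", default=None,
                     help="Reference output with the same layout as --out-folder.")
    parser.addoption("--out-folder", action="store", default=None,
                     help="Output folder, laid out as <detector>/<test-name>/...")
    parser.addoption("--picked-test", action="store", default=None,
                     help="Run only this test.")
    parser.addoption("--calibration", action="store", default=None,
                     choices=["dark", "correct"],
                     help="Run only dark or only correct tests.")
    parser.addoption("--detector", action="append", default=None,
                     help="Detector to test; may be given several times.")
    parser.addoption("--no-numerical-validation", action="store_true", default=False,
                     help="Stop after checking that the SLURM jobs COMPLETED.")
    parser.addoption("--validation-only", action="store_true", default=False,
                     help="Only validate existing output against the reference.")
```

Inside a test or fixture, the values can then be read with `request.config.getoption("--release-test")`, which is how such a boolean flag can keep the automated tests from running unless explicitly requested.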

- Below are the steps taken to fully test the calibration files:

  - Run xfel-calibrate DET CAL ..., which submits the Slurm calibration jobs.

  - Check the state of the Slurm jobs after they finish processing.

  - Confirm that a PDF report is available in the output folder.

  - Validate the HDF5 files:

    - Compare the MD5 checksums of the output and reference files.

    - Find the datasets/attributes that differ between the two files.
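The PDF check and the MD5 comparison in the steps above can be sketched with the Python standard library alone. This assumes the output and reference folders share the same layout (as required for reference-folder) and that the HDF5 files use the `.h5` extension; the function names are hypothetical. A checksum mismatch only flags a file; locating the differing datasets and attributes is left to the next step.

```python
import hashlib
from pathlib import Path


def has_pdf_report(out_dir):
    """True if at least one PDF exists anywhere below out_dir."""
    return any(Path(out_dir).rglob("*.pdf"))


def md5sum(path, chunk_size=1 << 20):
    """MD5 hex digest of a file, read in chunks to keep memory use flat."""
    digest = hashlib.md5()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def compare_h5_files(out_dir, ref_dir):
    """Compare HDF5 files by relative path and MD5 checksum.

    Returns (missing, extra, mismatched), each a sorted list of relative paths:
    missing    - in the reference folder but not in the output folder,
    extra      - in the output folder but not in the reference folder,
    mismatched - in both folders, with different checksums.
    """
    out_dir, ref_dir = Path(out_dir), Path(ref_dir)
    out_files = {p.relative_to(out_dir) for p in out_dir.rglob("*.h5")}
    ref_files = {p.relative_to(ref_dir) for p in ref_dir.rglob("*.h5")}
    mismatched = sorted(
        rel for rel in out_files & ref_files
        if md5sum(out_dir / rel) != md5sum(ref_dir / rel)
    )
    return sorted(ref_files - out_files), sorted(out_files - ref_files), mismatched
```

Empty `missing` and `extra` lists also imply that the number of HDF5 files matches the reference folder.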

If any of these steps fails, the whole test fails and the next test starts.